Language is infinite, Your product should not be

Over the past few years I have been directly involved in developing GitHub Copilot, working on different parts of the tooling and grappling with the ethics and implications of these amazing AI models and their confounding abilities and limitations which constantly seem to be unveiling themselves to us.

When working on an LLM product there is an interesting consideration that is not present in other technologies, or at least not as prevalent. An LLM works in the domain of language and language can describe anything. This makes fitting the LLM to you product challenging as it will happily answer any query the user may offer regardless of the domain of your product. I have seen many people on social media identify they are chatting with an LLM simply by steering it way off topic and having a conversation having nothing to do with the service being offered. Language is an infinite tool of expression, but your product is definitely not.

In this exploration of the infinite, I started thinking about the nature of LLMs. Since they work in the area of human intelligence they are with regards to capabilities starting to play in the realm of the infinite. Naturally LLMs are resource constrained by memory, gpu size, their power consumption, but the tools and problems we have exposed to them are in theory limitless. We need a way to express the limitations of the system we want to impose on the LLMs which could be infinite. I have started using the language Breadth to capture this idea.

By breadth I mean an interface’s ability to span multiple, disjoint problem domains without forcing the user into different apps or workflows.

Why Infinite Matters

Until recently all software had a problem domain space, search engines for information retrieval, IDE’s for writing software etc.. The tools around this product were limited in capabilities, they had no ability to express concepts outside of what we built into the system. Now engineering seems to be at an interesting fork in the road for product design, as the tools we are working with have much more domain knowledge built into them then just our designed space.

Increasingly users are using an LLM’s for everything. Conversational interfaces like Chatgpt with built in tools are very capable of doing many different tasks, further enhanced by the explosion of MCP servers, agent to agent systems, etc.. This ties directly into the idea of language being infinite and expands this idea to action. If I can express any idea to a chat server and get it to respond and use tools to solve my problem for me, are we on the verge of a singular interface into everything possible via a computer via language? What happens as we connect these machines to 3d printers or other physical world altering interfaces?

Model Limitations

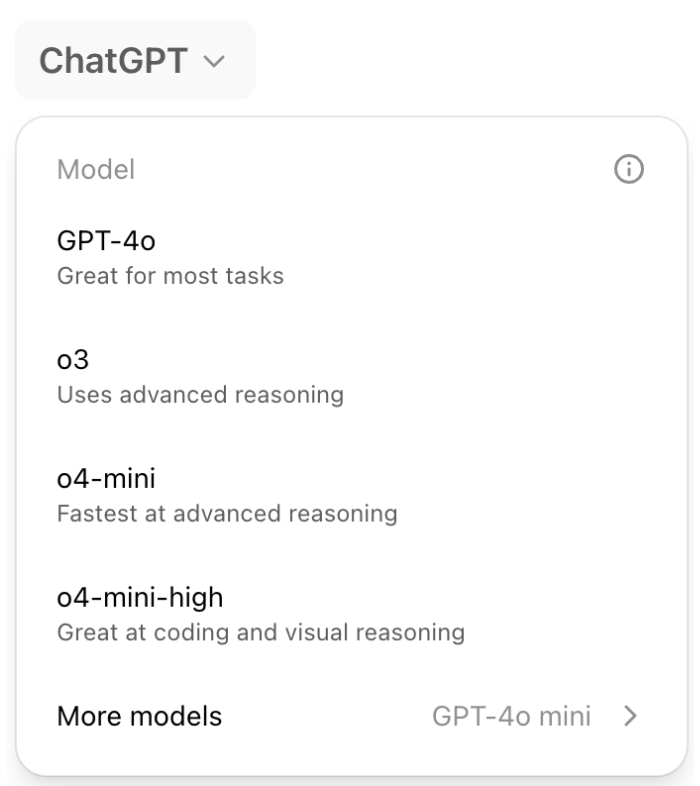

The sheer size of the problem space is proving to be problematic, while language is infinite it is becoming clear current models are not infinite. Instead model’s are constantly coming out which are constrained in different ways. Some models are specialized for tasks, others for other quality metrics such as performance. Our lack of tools around dealing with the ambiguity of infinite language is running directly into our lack of tools to handle the finite nature of models themselves. We have started collecting a whole new epidemic of bad design patterns to deal with it, one of my least favorite is the model selector

Here we are presented with 4 models each with very closely related descriptions, and more models yet. Navigating the ambiguity of which of these options is best for my task is difficult and requires a high understanding of the models capabilities. Also of concern is the absolute lack of knowledge of the models training data, placing a high level of trust on the model provider to offer a robust training data set captured correctly for the particular domain I am hoping to explore, which is ambiguous to the model provider.

This is really just the tip of the iceberg. Each of our tool sets that we build into our LLM’s are expanding the problem. Ideas are emerging like custom GPT’s that help align the model into a certain context via a custom prompt or a rag system, but it is easy to go outside the desired boundaries of these systems and diverge into a world of weird interactions, especially given the ambiguity of language. For example I recently had a conversation with a Physical trainer chatbot, forgot I was in it and my kid wanted an explanation of how weight works, when I prompted the LLM with this question it gave me an answer based on how the body can gain or lose weight, we really were interested in gravity.

LLM Based Products

I do not think that a single LLM alone can create limitless products, for a few reasons. An LLMs eager nature to respond no matter what can make them truly capable of making expansive products in ways that can make our head swim and lead to real blurry product edges, but I don’t think at this time infinite capabilities are something we can architect for. If you are reading this you are probably relieved.

As we have already seen, the context awareness of the LLM is very important to the response. Another reason why we will not see LLM’s be expansive any time soon is that structuring and storing relevant data in a useful and understandable way has a very real role in systems and will limit the infinite machinery or usefulness of solely language based tools.

Now this only limits those products which are LLM forward, unless they truly want to try to take on this expansive problem which would create some sort of uber monopoly. LLMs however in different environments present a uniquely different problem than just data storage and retrieval, because the environments in which they can exist are potentially infinite, or at minimum quite large.

Product edges

This puts us back into a product based world where LLMs are in a capacity offering an interface via language into the product domain of concern. In a product based world we want to try to keep the language use in bounds of the relevant conversations about the products or relevant services. In other cases such as broad use chatbots, the chatbot needs to broadly understand the context under which it is being used, who is it talking to, has the conversation changed at all since when it last left off? We also have to deal with people, and people are generally poor communicators, they often do not know what they want, or how to ask for it. This is why context is very important especially when considering the edges of conversational boundaries.

The Tradeoff of Domain size:

As a domain size that the model is dealing with grows, its training data must grow with it. Increasingly this seems hidden to us, as companies like Anthropic or OpenAI are bootstrapping most of this problem and offering Foundational Models. But how much of a domain to cover is a very real problem for them. The size of the pre-training for these FM’s is enormous and the compute and power needed to complete this training also enormous. To complicate matters when you get an FM and look to use it in your domain you have no idea if the model is good at the task you need to accomplish, unless you happen to have open benchmarks to reference, or if it even contains adversarial data to what you are looking to accomplish that may corrupt the LLM in transparent ways for you task or the users needs.

Interestingly, the FM models are rated based on open benchmarks. This practice is likely biasing model training towards the benchmarks themselves, and not necessarily towards the tasks that we want to accomplish with them. This is a problem as it can lead to models that are good at the benchmarks but not necessarily good at the tasks we want to accomplish.

FM models also make tradeoffs that are out of your control, such as tradeoffs of power consumption, latency or other performance characteristics that are required to train or inference the model on the sheer breadth/depth of the domains the model is seeking to contain in order to achieve a reasonable breadth of knowledge capture required to be a foundational model in the current landscape.

Products are not infinite

When we are building a product usually we don’t want to boil the ocean by allowing our users to do anything. The ability for foundational models to try to respond to essentially any request is a net negative for many product offerings. There is a time and a place to wax philosophically with your bartender or hairdresser and society encourages this sort of social interaction, but you likely do not want your airline chatbot talking to people about the philosophy of Plato. Foundational models will gladly do the job though.

A tricky thing though is that human language can context drift in and out of what seems like a logical progression. For example when talking to my personal trainer bot as I mentioned earlier I switched context to asking about how weight/gravity worked. These two contexts overlap in unexpected ways, and the bot answered based on its context, human performance, which is likely what we want from a product but maybe unexpected by the user. We can chalk it up to user error, but mis-intended use of context can create complex situations to reason about.

We have a few problems to deal with when designing for LLM’s

| Design Element | Problem | Tradeoff |

| Breadth | Context Drift | Clarity vs Flexibility |

| Prompt Specificity | MisInterpretation | Guidance vs creativity |

| Model Opacity | Mistrust | Training cost vs transparency |

With breadth we have to deal with context drift, or the ability to drift from one related topic to another. This means choosing between the flexibility of a user to change context vs the clarity of staying on what seems to be the topic or domain for the LLM. The challenge of doing this increases the longer a user continues a conversation, and managing the continuous conversation and staying on topic is a difficult problem space in ai.

With prompt specificity we have to decide how much we put in the prompt that attempts to answer the users question. If we guide an LLM a lot we are relying less on the LLM’s internal training data and ability to be creative, in return we hope to guide the model more towards what is considered correct in the domain.

Finally model opacity is tricky, we currently have very little insight into what makes up a LLM’s training data and we have to put a lot of trust into the trainers choices of what is truthful or appropriate. If we want to override behavior of an LLM we have a cost hurdle of either fine tuning the model or fully training a model ourselves. Radical open transparency is preferred but even then the amount of data these models take in is unwieldy for a human to reason about or guarantee accuracy of. To make matters worse, how do we determine the truthfulness of input, when we live in a world where most scientific research is not reproducible, and our politics are increasingly polarized and propaganda filled.

Are you an expert?

As a user, seeing a response like weight is based on what you eat and how much you exercise could be a very odd experience. Let’s face it we are constantly distracted, and we have very little insight into how the magic of AI works.

A lot of context goes into an AI’s answer. It could be from the training data, the prompt, RAG or some other method that an LLM ends up at its answer, the sources should be clear to increase trust with the system or in the worst case to understand how we ended up off topic. Every AI system should explain to you exactly what it is doing. In all other walks of life you would ensure you were working with an expert and not the guy down the street with the tinfoil hat. AI is trained off of so many sources, and may pull in data from sources that you do not trust. We should demand radical transparency and a system with clear boundaries of what it can do in a trustworthy way.

Recent efforts such as Explainable AI are pushing us in this direction and it can help your product direction and interactions with your users to invest in these efforts. Ultimately users trust explainable AI much more, studies show that AI models with explainable features see a 30% increase in user acceptance compared to black-box models.

Depth Not Breadth

In reality our society thrives on expert systems. We have experts in very small niches for example a pediatrician and an ophthalmologist are both doctors with depth in different fields. Products operate in the same way, the best products tend to have a narrow focus and deliver a lot of value or expertise in a specific problem area.

LLM’s and Foundation models break this mold. In seeking to understand human language and thinking patterns, they have encompassed a huge breadth of problem space. When moving a LLM into a problem space we want to transform this breadth based tool into a depth based field. This is being addressed in numerous ways today but remains a challenge that directly impacts user trust, societal health and product quality.

Boundaries matter

Very few things in the world attempt to handle the complexity of everything—and for good reason. Even language, for all its expressive power, has boundaries. The idea that language is infinite speaks to its potential, but the language we actually use is constrained by our current understanding of the world. It evolves with our curiosity, our discoveries, and our limits.

LLMs are no different. While they can recombine ideas in surprising and sometimes profound ways, they are still grounded in the boundaries of human knowledge. Their insights are shaped not by real-world exploration, but by the sum of what we’ve written, said, and shared so far. Like us, they are limited not just by data—but by perspective.

This is why boundaries matter—especially in product design. Every product has limits. Every intelligent system needs to know where it ends. The next wave of breakthroughs won’t come from removing boundaries, but from navigating them better—from designing interfaces that gracefully handle the blurry, shifting edges of human expression.

Because sometimes, the most intelligent thing a system can say is: “No” Or even better: “I don’t know.”